What is an Exam Blueprint?

Testing is a complex subject area. We’ve spent some time this year explaining the basics of Cut Scores and Exam Validity to help EMS educators ensure the tests they’re administering provide good evidence of the student’s knowledge. But how do we know whether our tests measure the domains of knowledge we want to evaluate? The answer is in an evidence-based testing blueprint.

Start at the Beginning

Testing should never be an afterthought in education. Although a summative or final exam is administered toward the end of a course, it should be used to align the curriculum before the course begins. Developing a blueprint based on educational priorities allows you to prioritize what is taught. It also ensures you spend enough time covering the tested material in class or lab, or through homework.

Before beginning the blueprint, you need to know what type of exam you’re creating. A formative exam (a quiz or unit test administered during the course) should have a minimum of 60 items. A summative exam, also called a final exam, should ideally have between 150 and 200 items.

A good place to start is a practice analysis and the U.S. Department of Transportation’s National Emergency Medical Services Education Standards. Keep in mind that the National Registry of EMTs balances its exams every five years from a practice analysis and not from the DOT objectives. If you’ve recertified and filled out a survey about patient encounters, you’ve helped the NREMT design an evidence-based blueprint. Fisdap’s exams reflect the same practice analysis.

Map out Your Topic Areas

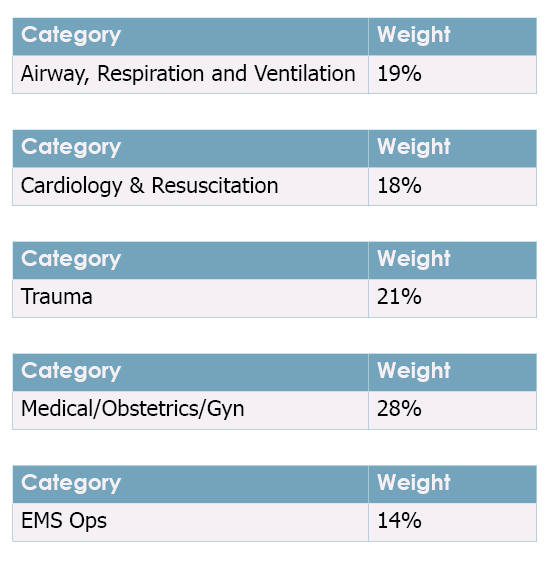

Once you have a good idea of what content you will be testing, you will want to divide the exam into three to seven roughly balanced, high-level topics. Each of these topics should contain between 10 and 15 subtopics. You then want to determine what percentage of test items each topic will contain. This is the topic’s weight in the test. Because practice analyses show that 85% of patient encounters are adult and 15% are pediatric, a test blueprint should reflect that age distribution.

For example, a 200-item summative exam with five topics could have the following weight distribution:

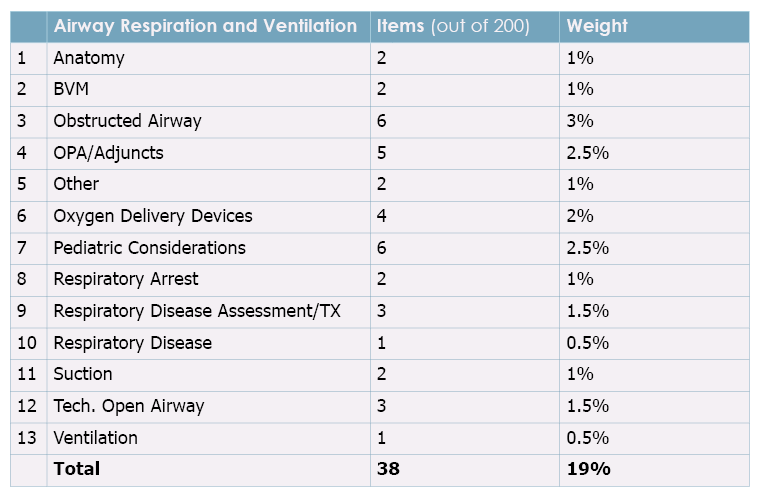
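As a rough illustration of the arithmetic, the following Python sketch converts topic weights into whole item counts. The function name `items_per_topic`, the four placeholder topic names, and every weight shown are assumptions made for this example; they are not the figures from the distribution above.

```python
def items_per_topic(total_items, weights):
    """Convert percentage weights into whole item counts that sum to total_items."""
    if sum(weights.values()) != 100:
        raise ValueError("topic weights must sum to 100 percent")
    # Exact (possibly fractional) number of items each topic is entitled to.
    exact = {topic: total_items * pct / 100 for topic, pct in weights.items()}
    # Start from the whole-number part of each share.
    counts = {topic: int(share) for topic, share in exact.items()}
    # Give any leftover items to the topics with the largest fractional parts.
    leftover = total_items - sum(counts.values())
    by_remainder = sorted(exact, key=lambda t: exact[t] - counts[t], reverse=True)
    for topic in by_remainder[:leftover]:
        counts[topic] += 1
    return counts


# Hypothetical weights for a 200-item summative exam with five topics.
example_weights = {
    "Airway, Respiration and Ventilation": 20,
    "Placeholder Topic B": 25,
    "Placeholder Topic C": 15,
    "Placeholder Topic D": 25,
    "Placeholder Topic E": 15,
}

for topic, count in items_per_topic(200, example_weights).items():
    print(f"{topic}: {count} items")
```

Handing leftover items to the largest remainders is only one possible tie-breaking choice; it keeps the total exact when the weights do not divide the exam evenly.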

Zooming into the “Airway, Respiration and Ventilation” topic, your subtopics might look something like the following:

Note that with six questions under “Pediatric Considerations,” we are ensuring that 15% of this exam topic addresses pediatric populations.
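The pediatric count can be checked with a line of arithmetic. The sketch below assumes the topic holds 40 items, which is the size at which six items equal 15%; the function name and the round-half-up choice are illustrative rather than part of any published method.

```python
PEDIATRIC_PERCENT = 15  # share of pediatric encounters cited from practice analyses


def pediatric_item_count(topic_items, percent=PEDIATRIC_PERCENT):
    """Number of items in a topic that should address pediatric patients."""
    # Integer arithmetic with round-half-up, so 4.5 items becomes 5 rather than 4.
    return (topic_items * percent + 50) // 100


topic_items = 40  # assumed size of the topic
print(pediatric_item_count(topic_items))  # 6
```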

Determine Cognitive Rigor

Once we’ve broken down the categories into subtopics, we want to make sure the items we’re including in the exam are balanced with knowledge and critical-thinking concepts to evaluate students’ overall abilities at the level we’re expecting them to perform. Fisdap uses a simplified Bloom’s Taxonomy that categorizes items as evaluating knowledge, application or problem-solving abilities.

There is no definitive answer regarding distribution of the items in your exam based on cognitive rigor. However, you can start out by determining which level of expertise you expect from students who will pass the exam. When making this determination, you should consider the minimally qualified candidate (e.g., the C student rather than the A student). You should also consider whether that student needs to know facts, apply them to situations, or problem solve.

Again, consider the level of exam you’re administering. A student who is minimally qualified to pass a quiz during a course may still be largely functioning in the knowledge domain. A student who is minimally qualified to pass a cardiology course needs to be able to think critically (i.e., apply knowledge) to evaluate a situation. This type of summative exam should require a student to analyze and solve problems, justify interventions, and compose field diagnoses in dynamic environments.

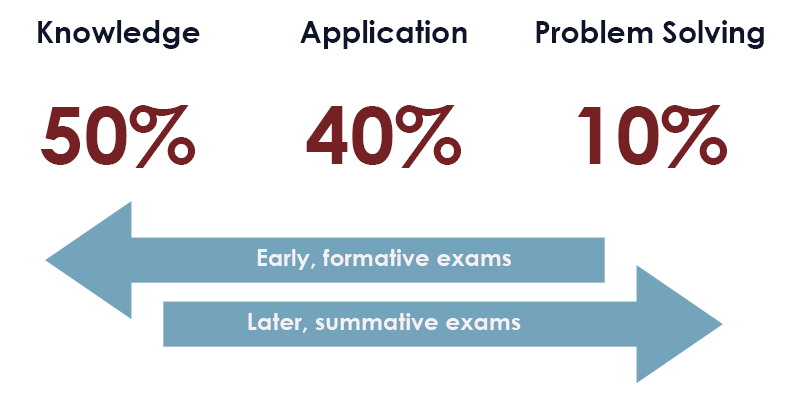

One good guideline is the 50-40-10 rule, which is illustrated below.

You can use these numbers as a baseline and adjust depending on the purpose of the exam.
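A small Python sketch can turn such a baseline into item counts. It assumes that the 50-40-10 split maps, in order, to the knowledge, application and problem-solving levels named earlier; that mapping, the function name `rigor_targets`, and the handling of rounding are assumptions for illustration.

```python
RIGOR_LEVELS = ("knowledge", "application", "problem solving")


def rigor_targets(total_items, split=(50, 40, 10)):
    """Split an exam's items across cognitive levels by percentage."""
    if len(split) != len(RIGOR_LEVELS) or sum(split) != 100:
        raise ValueError("split needs one percentage per level, summing to 100")
    # Round each share down to a whole number of items.
    counts = [total_items * pct // 100 for pct in split]
    # Any items lost to rounding go to the first level (an arbitrary choice).
    counts[0] += total_items - sum(counts)
    return dict(zip(RIGOR_LEVELS, counts))


print(rigor_targets(200))                    # baseline for a 200-item final exam
print(rigor_targets(60, split=(70, 25, 5)))  # a hypothetical adjusted split for a quiz
```

Passing a different `split` is one way to express the adjustment for an exam's purpose, for example weighting an early quiz more heavily toward knowledge items.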

For quizzes and course exams, keep in mind that the earlier and more frequently a student is exposed to critical thinking items, the better the student will be able to understand and perform at the level expected during the final exam. A final exam should not be the first time a student is exposed to a critical thinking item. By this time, the student should already be familiar with items covering complex topics.

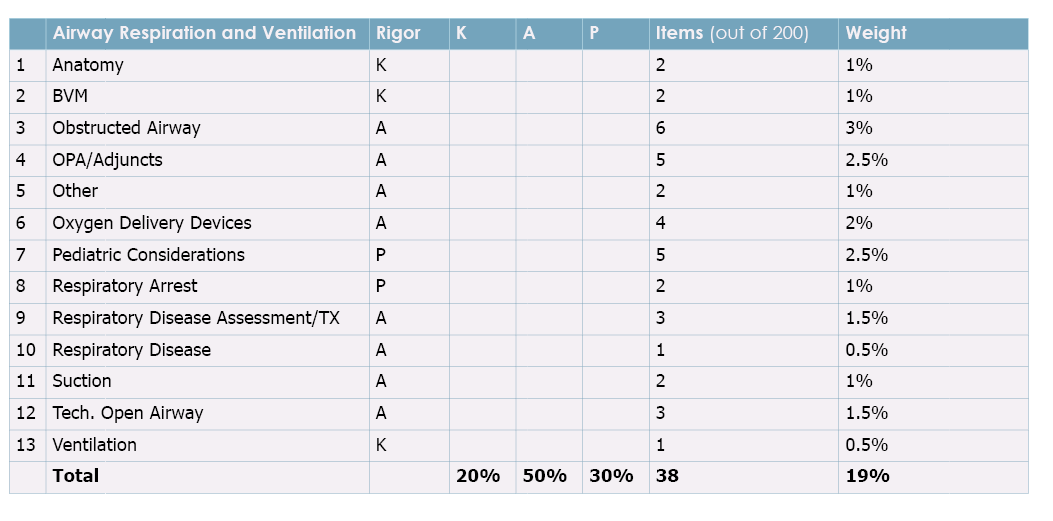

Again, there is no definitive answer to the question of rigor, and it is worthwhile to look at the subtopics within a topic in your exam rather than just at the topic. After determining the rigor of the subtopics and what you would like the overall distribution of the exam to be, your blueprint might have the following breakdown:

Fill in the Items

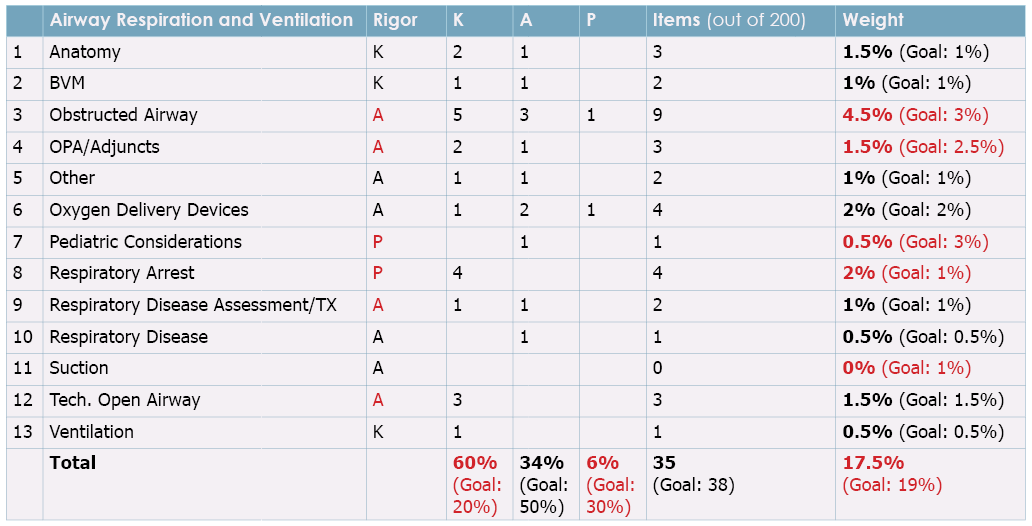

From here, the next and final step is to ensure your test items match the blueprint you’ve established. It’s a good idea to start by placing any test you are currently administering (or planning to administer) into your blueprint and comparing your desired distributions with the current distributions. For example, it might look like the following matrix:

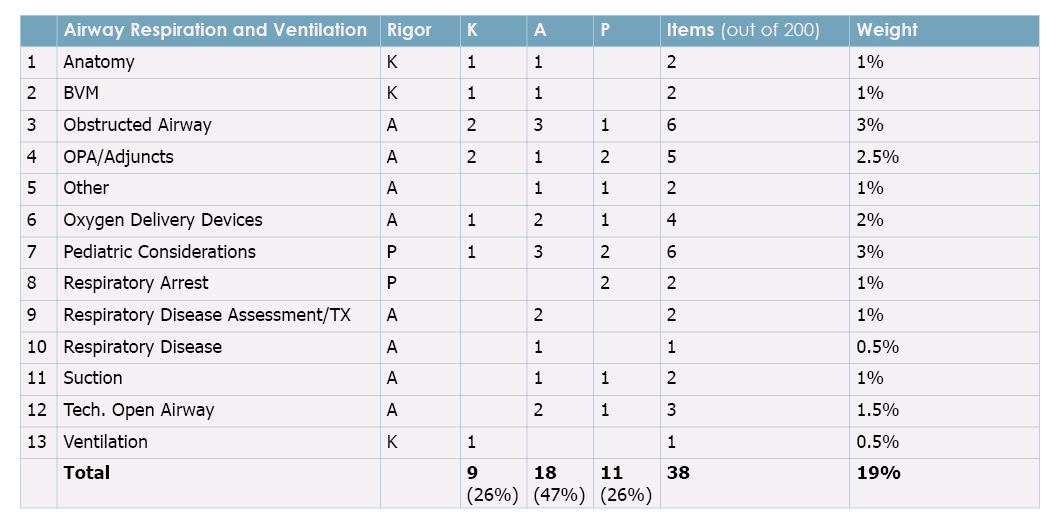

In the above example, the items highlighted in red are significantly different from what you wanted to achieve. This gives you a good idea where you need to start making changes, such as removing and modifying existing items and finding or creating new ones. The following is an example of what that section of the blueprint might look like once you have completed that work:
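The comparison of desired and current distributions can also be done programmatically. The Python sketch below flags subtopics whose current item count is far from the target; the function name `flag_gaps`, the tolerance of two items, the placeholder subtopic names, and all counts are hypothetical and do not come from the matrix in this article.

```python
def flag_gaps(desired, current, tolerance=2):
    """Return subtopics whose current item count misses the target by more than tolerance.

    Positive values mean too many items; negative values mean too few.
    """
    gaps = {}
    for subtopic, want in desired.items():
        have = current.get(subtopic, 0)  # a subtopic with no items counts as zero
        difference = have - want
        if abs(difference) > tolerance:
            gaps[subtopic] = difference
    return gaps


# Hypothetical item counts for three subtopics of one topic.
desired = {"Pediatric Considerations": 6, "Placeholder Subtopic A": 4, "Placeholder Subtopic B": 3}
current = {"Pediatric Considerations": 2, "Placeholder Subtopic A": 5, "Placeholder Subtopic B": 8}

for subtopic, difference in flag_gaps(desired, current).items():
    print(f"{subtopic}: {difference:+d} items relative to the blueprint")
```

The tolerance decides what counts as a significant difference, so it is worth setting it to match the size of your exam.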

Conclusion

Creating and validating test items to fill a blueprint can be difficult. However, using a blueprint to determine the types and topics of questions you want to administer and populating your blueprint with items that fit those criteria are important parts of building an exam that will effectively and reliably evaluate your students’ competencies both during and at the end of a course.